10 Reasons Why GenAI Projects Fail

- and How Enterprise Architects Can Fix Them

by Daniel Lambert

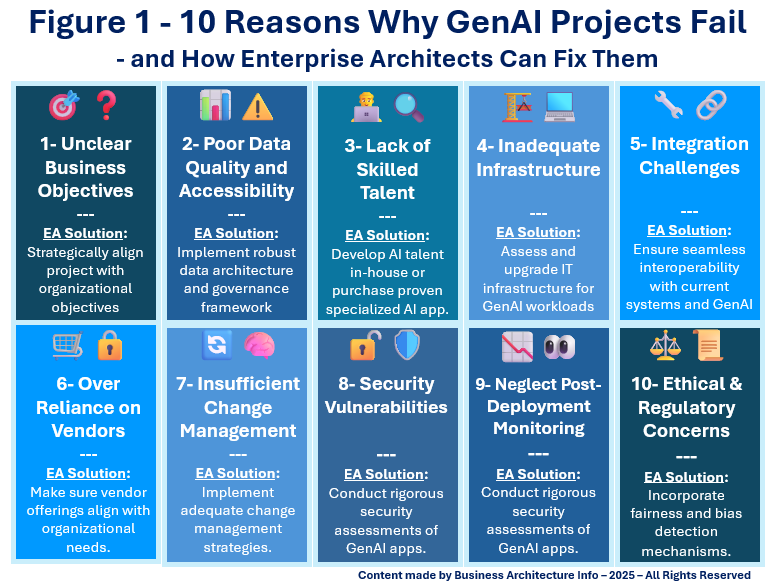

Generative AI (GenAI) holds immense promise for enterprise transformation, yet many initiatives falter due to common pitfalls. Enterprise Architects (EAs) are uniquely positioned to navigate these challenges by aligning GenAI projects with strategic objectives, ensuring robust data governance, and fostering cross-functional collaboration. By proactively addressing issues such as unclear goals, data quality concerns, and integration complexities, as shown in Figure 1 above, EAs can steer GenAI initiatives toward successful outcomes.

1. Unclear Business Objectives

Many GenAI projects begin without clearly defined objectives, leading to misaligned expectations, inefficient resource allocation, and suboptimal outcomes. This lack of clarity often results in teams pursuing solutions that don't align with business needs, causing wasted efforts, budget overruns, and stakeholder dissatisfaction. To mitigate these risks, it's crucial to establish specific, measurable, achievable, relevant, and time-bound (SMART) goals at the project's outset. By doing so, organizations can ensure that GenAI initiatives are purpose-driven, strategically aligned, and positioned for success.

Furthermore, setting clear objectives facilitates better communication among cross-functional teams, ensuring that everyone understands the project's purpose and their role in achieving it. It also provides a framework for evaluating progress and making informed decisions throughout the project lifecycle. Without well-defined goals, GenAI projects risk becoming aimless endeavors that fail to deliver meaningful value to the organization.

EA Solution: EAs should collaborate with stakeholders to establish clear, measurable objectives that align with the organization's strategic vision and goals. Utilizing frameworks like TOGAF can help ensure that GenAI initiatives support business capabilities and deliver tangible value.

2. Poor Data Quality and Accessibility

GenAI models rely heavily on high-quality, well-structured data to function effectively. When the data used for training is incomplete, inconsistent, or outdated, it can significantly compromise the model's performance and reliability. For instance, a GenAI system trained on flawed data may produce outputs that are inaccurate or misleading, leading to poor decision-making and potential reputational damage. Ensuring data accuracy, consistency, and relevance is therefore paramount to the success of any GenAI initiative.

Moreover, poor data quality can lead to issues such as model hallucinations, where the AI generates information that is plausible sounding but incorrect or nonsensical. This not only undermines user trust but can also have serious implications in critical applications like healthcare, finance, or legal services. To mitigate these risks, organizations must implement robust data governance frameworks, including regular data audits, validation processes, and the use of tools for data cleansing and enrichment. By prioritizing data quality, enterprises can enhance the reliability and effectiveness of their GenAI applications.

EA Solution: Implement robust data governance frameworks to cleanse, standardize, and manage data effectively. EAs should ensure that data architecture supports the needs of GenAI models, facilitating access to reliable datasets.

3. Lack of Skilled Talent

A shortage of professionals skilled in AI and machine learning can significantly hinder project development and deployment. This talent gap often leads to delays, increased costs, and suboptimal implementation of AI initiatives. Organizations may struggle to find qualified candidates, resulting in prolonged recruitment processes and potential compromises in project quality. The scarcity of skilled AI professionals can also limit innovation and the ability to leverage AI technologies effectively, impacting overall competitiveness.

EA Solution: Either develop talent acquisition and retention strategies focused on AI expertise or purchase a proven and specialized AI agent application from a vendor. Enterprise Architects (EAs) can lead this by aligning AI initiatives with business objectives, ensuring data readiness, and establishing robust governance frameworks. Their role is pivotal in integrating AI solutions that are scalable, secure, and compliant with organizational standards.

4. Inadequate Infrastructure

Generative AI models are highly resource-intensive, requiring vast computational power and storage. Training large-scale models like GPT-4 involves processing enormous datasets with billions of parameters, necessitating thousands of high-performance GPUs or TPUs. This leads to significant energy consumption, with data centers experiencing increased power demands. Additionally, these models demand advanced storage systems to manage and process the massive data volumes involved.

EA Solution: Assess and upgrade the IT infrastructure to support GenAI workloads. EAs should consider leveraging cloud-based solutions to scale resources as needed, ensuring that infrastructure aligns with the demands of GenAI applications.

5. Integration Challenges

Integrating GenAI solutions into existing enterprise systems presents significant challenges that can impede adoption and scalability. Legacy infrastructures often lack compatibility with modern AI technologies, requiring substantial modifications to accommodate GenAI applications. This integration complexity can lead to increased costs, extended timelines, and potential disruptions to business operations. Moreover, the absence of standardized interfaces and protocols further complicates the seamless incorporation of GenAI into established workflows, hindering the realization of its full potential.

EA Solution: Promote cross-functional collaboration between AI teams and IT departments. EAs should develop integration strategies that ensure seamless interoperability with current systems, facilitating the smooth deployment of GenAI solutions.

6. Overreliance on Vendors

Overreliance on third-party vendors for generative AI solutions—without sufficient internal understanding—can lead to misaligned implementations, security vulnerabilities, and compliance risks. This dependency may also result in operational disruptions, intellectual property concerns, and reputational damage if vendors fail to meet performance or ethical standards. Establishing robust internal oversight and risk management frameworks is essential to mitigate these challenges.

EA Solution: Build internal competencies to critically assess and manage vendor solutions. EAs should ensure that vendor offerings align with organizational needs and standards, maintaining control over GenAI initiatives.

7. Insufficient Change Management

Resistance to change and a lack of user adoption can derail GenAI initiatives. Employees may fear job displacement, distrust AI outputs, or feel overwhelmed by unfamiliar technologies, leading to skepticism and disengagement. Without proactive change management, these concerns can stall adoption and diminish the return on AI investments.

EA Solution: Implement comprehensive change management strategies. EAs should engage stakeholders early, communicate benefits clearly, and provide training to facilitate adoption, fostering a supportive environment for GenAI integration.

8. Security Vulnerabilities

Introducing GenAI solutions can expose organizations to new security risks. These include prompt injection attacks, where adversaries craft inputs that manipulate AI behavior, leading to unintended actions or data leakage. Additionally, GenAI systems can inadvertently expose sensitive information if not properly secured, posing significant privacy concerns.

EA Solution: Conduct thorough security assessments of GenAI applications. EAs should integrate security measures throughout the development lifecycle to mitigate risks, ensuring the protection of sensitive data and systems.

9. Neglecting Post-Deployment Monitoring

Failing to monitor GenAI models after deployment can lead to performance degradation and unforeseen issues. Over time, models may encounter data drift, where the statistical properties of input data change, leading to decreased accuracy and reliability. Additionally, GenAI systems can exhibit behaviors like hallucinations, producing outputs that are plausible but incorrect, if not continuously evaluated. Neglecting post-deployment monitoring risks eroding user trust and compromising the effectiveness of AI-driven solutions.

EA Solution: Establish ongoing monitoring and maintenance protocols. EAs should use Machine Learning Operations practices to ensure models remain effective and relevant over time, adapting to changing data and business needs.

10. Ethical and Regulatory Concerns

Generative AI projects frequently confront ethical dilemmas and regulatory scrutiny, particularly regarding data privacy and algorithmic bias. These challenges can impede adoption and scalability if not proactively addressed. Ensuring compliance with evolving regulations, such as the European Union's AI Act, and implementing robust ethical frameworks are essential for responsible deployment.

EA Solution: Establish ethical guidelines and compliance protocols for AI development. EAs should incorporate fairness and bias detection mechanisms to uphold ethical standards, ensuring that GenAI applications adhere to legal and societal expectations.

The successful deployment of GenAI projects hinges on a comprehensive approach that encompasses clear objective setting, data integrity, skilled talent, and effective change management. Enterprise Architects play a pivotal role in orchestrating these elements, bridging the gap between technological innovation and business value. By leveraging their expertise, EAs can transform GenAI from a conceptual promise into a tangible asset that drives organizational growth and competitiveness.